OpenInsight LinearHash Service and High Server Memory Usage

The rule of thumb when it comes to the Universal Driver’s LinearHash service and server performance is to have enough free physical RAM available on the server to fit all the commonly accessed OpenInsight database files (the LK and OV files managed by the LinearHash service) into memory. This article walks through the problems that arise when the commonly accessed LinearHash files no longer fit in the server’s available memory.

The Importance of Free RAM

Starting with Server 2008 x64, Windows is more aggressive about using free physical memory as cache to speed up disk I/O. The MSDN blog article “The Memory Shell Game” is an excellent read for understanding how Windows uses memory. The article states:

Typically, large sections of [unused] physical RAM are used for file cache. This cache is necessary to improve disk performance. Without it, disk I/O would make the system crawl along at an nearly unusable pace. The file system cache is just like a working set for a process.

Behind the scenes Windows is speeding up disk access by keeping files read from disk in unused memory.

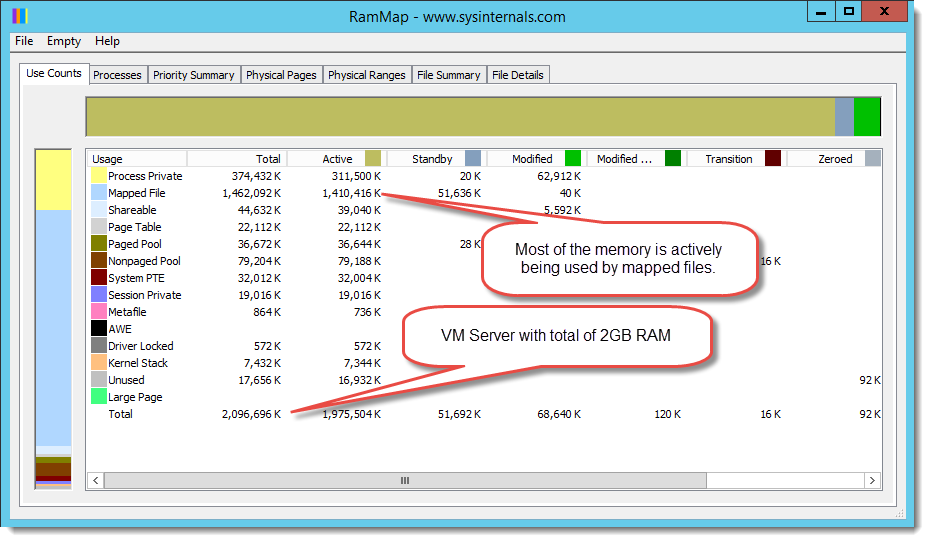

We can see this in action by using the Windows Sysinternals RAMMap utility. The screen shot below is on a VM server with 2 GB of assigned memory dedicated to the LinearHash service:

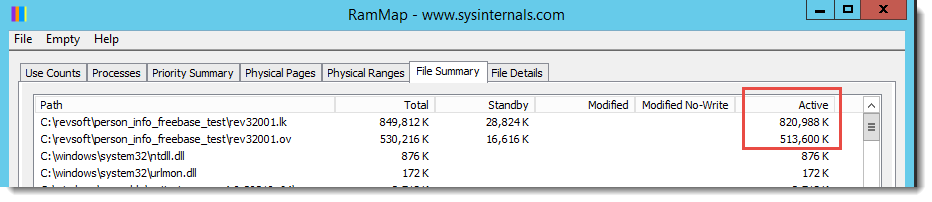

Most of the memory is in active use under the Mapped File category, which is the memory classification used for files ‘mapped’ into memory for faster access. We can prove the LinearHash service is the source of the high memory usage by viewing the File Summary tab and noting the file names with data in memory:

The LK/OV files in question are over 8 GB, and Windows is attempting to keep as much of them in active memory as possible to improve the LinearHash service’s performance when accessing them.

Who Does the Caching?

If you’ve ever looked at the memory usage of the LinearHash service (LH47srvc.exe or RevLHSrvc64nul.exe process) in the server’s task manager, you’ll see the memory usage is usually less than 100 MB. The LinearHash service doesn’t use memory as a cache to minimize the need to access the slower disk. Behind the scenes Windows Server is watching the LinearHash service access the LK/OV files on disk and assigning the frequently accessed file sections to memory. This is evident from the screen shots in the previous section.

Once Windows loads a file into memory as a mapped file, anecdotal experience suggests that even the most badly hashed file on the slowest disk can perform lightning fast.

Typically a database has built-in caching mechanisms which are aware of information usage and context. This enables the database to intelligently keep important information in memory. Since the LinearHash service doesn’t perform caching, it defers this to Windows…which isn’t aware of the context or importance of the information it’s caching. Windows can’t determine which information is more important to cache and as a result it tries to cache everything possible.

The Problem – What to Cache?

When the frequently accessed sections of a database grow larger than the server’s available free RAM, Windows has to start paging data in and out of RAM to fulfill requests for file sections or to run other programs requesting memory. This can result in sluggish or unresponsive server operations and slower than normal database responses because there isn’t enough free (or available) memory to function efficiently. On a heavily loaded server this can bring the server to a crawl, resulting in unacceptable performance.

The Solution

Adding more memory is always a solution but not always practical. This is especially true for large databases that have very random access patterns and whose size far exceeds the amount of memory that can be added to a server. So what can be done?

The Universal Driver 5.0.0.5 and 4.7.2 have a new undocumented registry configuration setting named CreateFileFlags which, according to a recent Sprezzatura blog article, is used by the LinearHash service when opening LK and OV files. From the article:

The service expects that, if this registry entry exists, it has a DWORD value. If it’s not set, its default value is the value associated with:

FILE_ATTRIBUTE_NORMAL | FILE_FLAG_RANDOM_ACCESS | FILE_FLAG_OVERLAPPED

(which evaluates to 0x50000080)

So the developer can now ensure that writes are passed straight through to the disk and not cached. See the Microsoft documentation here.

With this new configuration option, Revelation Software has opened up the possibility of dealing with high memory usage. Microsoft also provides additional guidance in its support document “Performance degrades when accessing large files with FILE_FLAG_RANDOM_ACCESS”. This KB article suggests removing the FILE_FLAG_RANDOM_ACCESS flag when opening large files for random access I/O. We can now do this by setting CreateFileFlags.
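The flag arithmetic can be checked with a few lines of Python. This is only a sketch of the bit manipulation, not code from the LinearHash service. The constant values are the ones defined in the Windows SDK headers, and the variable names are chosen here for illustration.

```python
# Win32 CreateFile flag constants (values from the Windows SDK headers).
FILE_ATTRIBUTE_NORMAL = 0x00000080
FILE_FLAG_RANDOM_ACCESS = 0x10000000
FILE_FLAG_OVERLAPPED = 0x40000000

# Default used when CreateFileFlags is not set, per the quoted article.
default_flags = (
    FILE_ATTRIBUTE_NORMAL | FILE_FLAG_RANDOM_ACCESS | FILE_FLAG_OVERLAPPED
)

# Clear only the random-access hint and leave the other bits untouched.
without_random_access = default_flags & ~FILE_FLAG_RANDOM_ACCESS

print(f"default:               0x{default_flags:08X}")
print(f"without RANDOM_ACCESS: 0x{without_random_access:08X}")
```

The first value matches the 0x50000080 default quoted above. The second, 0x40000080, is the same set of flags with the random-access hint removed.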

Applying the Solution

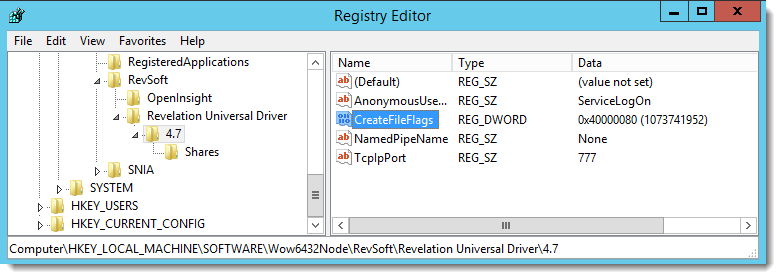

On the server we begin by creating a new DWORD registry entry named CreateFileFlags in:

Universal Driver 4.7: HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\RevSoft\Revelation Universal Driver\4.7

Universal Driver 5.0: HKEY_LOCAL_MACHINE\SOFTWARE\Revsoft\Revelation Universal Driver\5.0

The value should be hexadecimal 0x40000080, which corresponds to opening files with these flags:

FILE_ATTRIBUTE_NORMAL | FILE_FLAG_OVERLAPPED

After making the change shown in the screen shot and restarting the LinearHash service, we can check the results when clients start accessing the database.
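For servers where editing the registry by hand is inconvenient, the same setting could be expressed as a .reg file. The sketch below only builds the file text and prints it; it does not touch the registry. The helper name build_reg_file is hypothetical, the key path is the Universal Driver 5.0 path given above, and whether a .reg import suits your environment is an assumption to verify before use.

```python
# Key path for Universal Driver 5.0, as given in this article.
KEY_PATH_5_0 = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Revsoft\Revelation Universal Driver\5.0"
)

# FILE_ATTRIBUTE_NORMAL | FILE_FLAG_OVERLAPPED
NEW_FLAGS = 0x40000080


def build_reg_file(key_path: str, flags: int) -> str:
    """Return .reg text that sets CreateFileFlags as a DWORD under key_path.

    Hypothetical helper for illustration; it only formats text.
    """
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{key_path}]",
        # .reg files write DWORD values as eight hex digits without "0x".
        f'"CreateFileFlags"=dword:{flags:08x}',
        "",
    ]
    return "\n".join(lines)


reg_text = build_reg_file(KEY_PATH_5_0, NEW_FLAGS)
print(reg_text)
```

For Universal Driver 4.7 the Wow6432Node path listed above would be passed instead. Either way, the LinearHash service still has to be restarted before the new value takes effect.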

Checking the Results

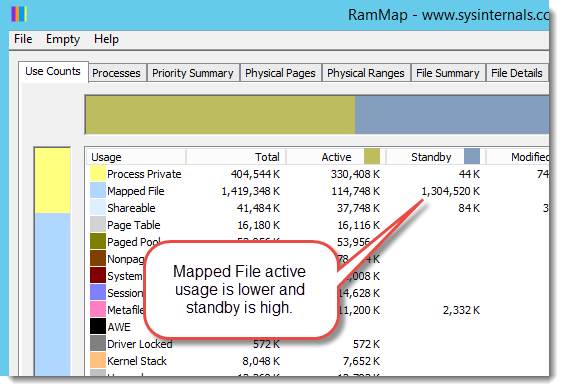

The screen shot below still shows high memory usage but Windows has assigned most of the Mapped File memory usage to Standby memory instead of Active memory:

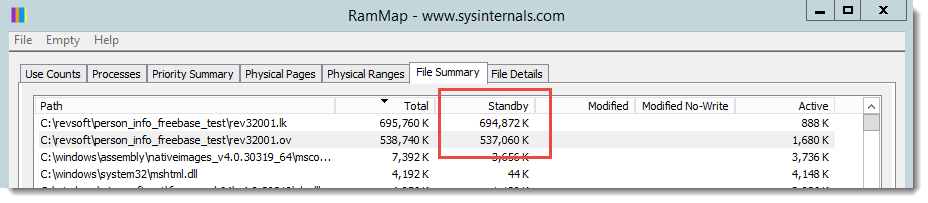

Checking the File Summary tab reveals that the LK and OV files are still loaded in memory but the contents are held in Standby memory instead of Active memory:

Standby Versus Active Memory

After the change, RAMMap shows Active mapped file memory is low and Standby mapped file memory is high. Isn’t this still bad? We still have high memory usage. What’s going on? If we refer back to the MSDN article, it classifies Standby memory as:

Standby pages – This is a page that has left the process’ working set. This page is in physical RAM…It is still associated with the process, but not in its working set [i.e., actively used memory]…That page also has one foot out the door. If another process or cache needs more memory, the process association is broken and it [i.e., standby memory] is moved to the free page list. Most of the pages in available memory are actually standby pages. This makes sense when you realize that these pages can be quickly given to another process…and can be quickly given back if the process [e.g., the LinearHash service] touches the page again. [As a result, the] vast majority of available memory is not wasted or empty memory.

In summary, Standby memory is data in RAM, but not marked as actively used. It is readily available to the original process that requested it. Thus, Windows can either give it back to the LinearHash service or free the memory and assign it to another process.

If the server starts new processes or needs more memory for other tasks, it knows that the Standby memory can be used for other purposes instead of having to page data in and out of RAM.
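A toy model can make the difference concrete. The sketch below is a deliberate simplification: it treats available memory as Standby plus Free and ignores the other page lists Windows maintains. The class name, method names and all numbers are made up for illustration; they are not taken from the screen shots.

```python
from dataclasses import dataclass


@dataclass
class MemorySnapshot:
    """Simplified view of physical RAM, in MB (illustrative only)."""

    active_mb: int
    standby_mb: int
    free_mb: int

    @property
    def available_mb(self) -> int:
        # Standby pages can be repurposed quickly, so in this model they
        # count as available alongside free pages.
        return self.standby_mb + self.free_mb

    def fits_without_paging(self, request_mb: int) -> bool:
        # In this model a request is satisfied without trimming active
        # pages only if it fits in standby plus free memory.
        return request_mb <= self.available_mb


# Hypothetical 2 GB server before and after removing FILE_FLAG_RANDOM_ACCESS.
before = MemorySnapshot(active_mb=1700, standby_mb=100, free_mb=248)
after = MemorySnapshot(active_mb=300, standby_mb=1500, free_mb=248)

for label, snap in (("before", before), ("after", after)):
    print(label, snap.available_mb, snap.fits_without_paging(1000))
```

Total memory in use is the same in both snapshots. What changes is how much of it Windows can hand to another process without first pushing active pages out.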

Conclusion

High memory usage alone isn’t bad. It is a sign that the server’s resources are being fully utilized. However, excess paging and the resulting slow performance can be unacceptable. Removing the FILE_FLAG_RANDOM_ACCESS flag helps Windows make better use of the available resources: less of the OpenInsight database files is kept in Active memory and more is held in Standby memory, where it is easier to reallocate to other tasks when memory is low.

The impact on LinearHash performance depends greatly on the amount of commonly accessed data the server can keep in memory, and may be the subject of future blog articles.

Nice work!

Great article Jared. Sorry I’m late to the party but I literally just received an inquiry from IT regarding RAMMap and the ‘Mapped File’ issue. A quick search of RAMMap and OpenInsight brought me here! What a time saver. Thank you.

That said, in addition to us having the exact scenario outlined above; 4 of our 4000 files (ALL .ov ) are consuming 75% of the ‘Mapped File’.

My concern is that the 2 largest .ov’s are 3.2 and 1.9 Gb respectively. Obviously these files need to be resized immediately so that more data is in .lk not .ov (and with the appropriate frame separation to allow a standard deviation as close to 1 as possible) but will that really make a significant difference given how everything is in physical memory already so there really is no ‘disk spinning’ all over the place to read in the .ov tracks and frames?

I am wondering, if I just resize will I see significant results without removing the FILE_FLAG_RANDOM_ACCESS vs. just removing the FILE_FLAG_RANDOM_ACCESS vs. doing both?

Thank you!

>>When the frequently accessed sections of a database grows larger than the server’s available free RAM capacity, Windows has to start paging data in and out of RAM

How do you know if this is happening?

The tools referenced in this article help to answer that question. Therefore, I’m guessing you are more wondering how one would suspect this is happening in the first place?

Correct

Performance issues. If your physical tables aren’t of the magnitude of size being discussed in this article, then it might not be an issue. Bottom line: unless you want to proactively analyze your system, this is something you would simply look into when the system isn’t performing as well as you would expect.